The absurdity of software patents: a personal example

When I learnt about free software some time ago, I came to believe that software patents were a very insidious threat. It was dangerous that someone could claim exclusivity over an algorithm: it could stifle innovation, it could be an obstacle to independent free software implementations of useful standards, etc. I very much hoped that Europe would keep them at bay, and I still remember when the proposed EU directive on the patentability of computer-implemented inventions was rejected in 2005, giving us the relative security that we now enjoy in the EU compared with other countries such as the USA.

Since then, some of the work on speech recognition that I did while interning at Google New York has been turned into a US patent application, which was recently published. Google paid me a patent bonus, and I helped them fix problems in drafts of the application. This process has given me more material on which to base an opinion about software patents in the US, and I have changed my mind accordingly. I no longer believe that they are an insidious threat. I now believe them to be a blatant absurdity.

I invite anyone doubting this fact to skim through the application and see for themselves. Just for fun, here are some selected bits.

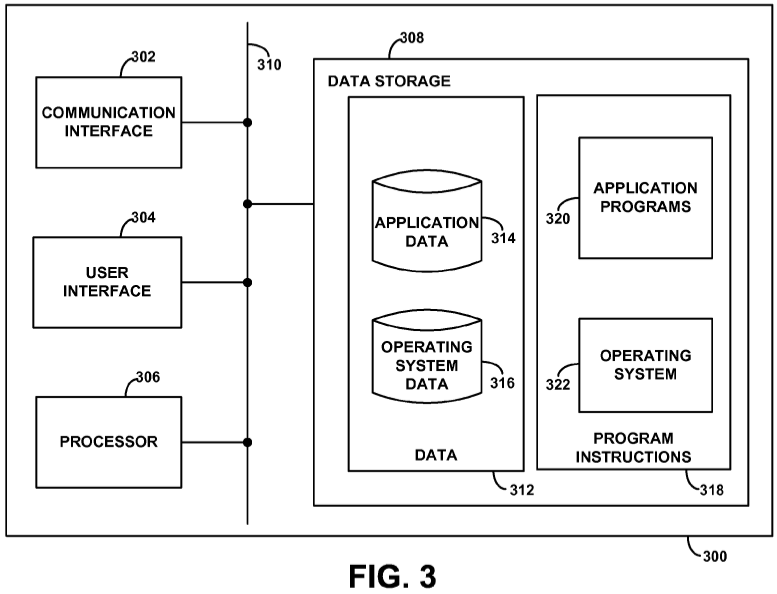

Our tour begins with a wall of boilerplate on pages 2 to 4 that describes mundane banalities about computers in stilted prose, making a surreal attempt at complete and exhaustive generality. (Remember that the topic is speech recognition, in which printers and light bulbs are, as we all know, key components.)

[0058] User interface 304 may function to allow client device 300 to interact with a human or non-human user, such as to receive input from a user and to provide output to the user. Thus, user interface 304 may include input components such as a keypad, keyboard, touch-sensitive or presence-sensitive panel, computer mouse, trackball, joystick, microphone, still camera and/or video camera. User interface 304 may also include one or more output components such as a display screen (which, for example, may be combined with a presence-sensitive panel), CRT, LCD, LED, a display using DLP technology, printer, light bulb, and/or other similar devices, now known or later developed. User interface 304 may also be configured to generate audible output(s), via a speaker, speaker jack, audio output port, audio output device, earphones, and/or other similar devices, now known or later developed. In some embodiments, user interface 304 may include software, circuitry, or another form of logic that can transmit data to and/or receive data from external user input/output devices. Additionally or alternatively, client device 300 may support remote access from another device, via communication interface 302 or via another physical interface (not shown).

It seems that you have to describe the overall context of the invention from scratch, even though this means repeating the same platitudes in every single application. In case you are wondering about those numbers peppered throughout, they are actually pointers to a helpful figure that wouldn't look out of place in a 1990 monograph about computer architecture.

Let us now move on to the gruesome sight of mathematical language being contorted and twisted to fit this strange mode of expression. The bulk of the application reads like a scientific paper eerily addressed to lawyers from the past. Here is an example from page 11:

[0162] As noted above, the search graph may be prepared by pushing the weights associated with some transitions toward the initial state such that the total weight of each path is unchanged. In some embodiments, this operation provides that for every state, the sum of all outgoing edges (including the final weight, which can be seen as an ε-transition to a super-final state) is equal to 1.
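For readers wondering what the quoted paragraph actually means: it describes weight pushing. In the probability semiring, the idea is to compute, for every state q, the total weight d(q) of all paths from q to the end (final weights included), multiply each transition q → q' by d(q')/d(q), divide each final weight by d(q), and move d(initial) to the start. The factors telescope along any path, so every path keeps its total weight, and at each state the outgoing weights plus the final weight then sum to 1. The Python sketch below is only an illustration under stated assumptions: the toy graph and the names `EDGES`, `FINAL`, `push_weights` and `is_stochastic` are hypothetical, the graph is assumed acyclic, and every state is assumed to reach a final state with nonzero weight. It is not code from the application.

```python
import math
from functools import lru_cache

# Hypothetical toy search graph: state -> list of (word, probability, next_state).
EDGES = {
    0: [("the", 0.6, 1), ("a", 0.2, 1)],
    1: [("cat", 0.5, 2), ("cap", 0.3, 2)],
    2: [],
}
# Final weights: the weight of stopping at each state (the "super-final" transition).
FINAL = {0: 0.0, 1: 0.1, 2: 1.0}
INITIAL = 0


def push_weights(edges, final, initial):
    """Push weights toward the initial state of an acyclic graph.

    Assumes every state reaches a final state with nonzero total weight,
    otherwise the division by potential(q) below fails.
    """

    @lru_cache(maxsize=None)
    def potential(q):
        # Total weight of all paths from q to the end, final weight included.
        return final[q] + sum(w * potential(nxt) for _, w, nxt in edges[q])

    pushed_edges = {
        q: [(word, w * potential(nxt) / potential(q), nxt) for word, w, nxt in out]
        for q, out in edges.items()
    }
    pushed_final = {q: final[q] / potential(q) for q in final}
    # The mass removed from the graph becomes the weight of the initial state.
    return pushed_edges, pushed_final, potential(initial)


def is_stochastic(edges, final):
    """Check that, at every state, outgoing weights plus the final weight sum to 1."""
    return all(
        math.isclose(final[q] + sum(w for _, w, _ in out), 1.0)
        for q, out in edges.items()
    )


pushed_edges, pushed_final, initial_weight = push_weights(EDGES, FINAL, INITIAL)
print(is_stochastic(pushed_edges, pushed_final), initial_weight)
```

On this toy graph, d(0) = 0.72, state 0's two transitions become 0.75 and 0.25, and the path "the cat" keeps its original weight 0.6 × 0.5 × 1.0 = 0.3 once the initial weight is included.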

Confused? Here is the beginning of the conclusion, which wraps things up in a surprisingly inconclusive way. I think the point is to make a desperate appeal to the reader's common sense, but I'm not sure.

[0192] The above detailed description describes various features and functions of the disclosed systems, devices, and methods with reference to the accompanying figures. In the figures, similar symbols typically identify similar components, unless context indicates otherwise. The illustrative embodiments described in the detailed description, figures, and claims are not meant to be limiting. Other embodiments can be utilized, and other changes can be made, without departing from the spirit or scope of the subject matter presented herein. It will be readily understood that the aspects of the present disclosure, as generally described herein, and illustrated in the figures, can be arranged, substituted, combined, separated, and designed in a wide variety of different configurations, all of which are explicitly contemplated herein.

The forced marriage between legalese and theoretical computer science jargon reaches its peak in the claim list. Reproducing it in full here would be both inconvenient and intolerable, but here is the general flavor:

An article of manufacture including a computer-readable medium, having stored thereon program instructions that, upon execution by a computing device, cause the computing device to perform operations comprising: selecting n hypothesis-space transcriptions of an utterance from a search graph that includes t>n transcriptions of the utterance...
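Stripped of the legalese, the quoted step (selecting n transcriptions out of the t > n that a search graph encodes) resembles what speech recognition calls n-best extraction. The Python sketch below illustrates only that generic idea, not the claimed method: the toy graph, its costs, and the names `GRAPH`, `FINAL_STATES` and `n_best` are hypothetical, and it assumes an acyclic graph with non-negative costs (for instance, negative log-probabilities).

```python
import heapq

# Hypothetical toy search graph: state -> list of (label, cost, next_state).
# Costs are non-negative (e.g. negative log-probabilities); lower is better.
GRAPH = {
    0: [("recognize", 1.0, 1), ("wreck a nice", 1.5, 2)],
    1: [("speech", 0.5, 3)],
    2: [("beach", 0.3, 3), ("peach", 0.9, 3)],
    3: [],
}
FINAL_STATES = {3}


def n_best(graph, start, finals, n):
    """Return up to n distinct transcriptions, cheapest first.

    Best-first enumeration of complete paths: with non-negative costs, paths
    leave the heap in order of increasing cost. Assumes an acyclic graph, so
    the enumeration terminates even when fewer than n transcriptions exist.
    """
    heap = [(0.0, (), start)]  # (cost so far, labels so far, current state)
    seen, results = set(), []
    while heap and len(results) < n:
        cost, labels, state = heapq.heappop(heap)
        # The first time a label sequence reaches a final state is its best path.
        if state in finals and labels not in seen:
            seen.add(labels)
            results.append((" ".join(labels), cost))
        for label, step_cost, nxt in graph[state]:
            heapq.heappush(heap, (cost + step_cost, labels + (label,), nxt))
    return results


# Select n = 2 of the t = 3 transcriptions encoded by the graph.
for text, cost in n_best(GRAPH, 0, FINAL_STATES, 2):
    print(f"{cost:.1f}  {text}")
```

Here the two cheapest transcriptions are "recognize speech" (cost 1.5) and "wreck a nice beach" (cost 1.8); the enumeration is exponential in the worst case, which is fine for a sketch but not for a real recognizer.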

It might just be me, but I find this outlandish prose pretty fascinating. Now, consider that this 32-page double-column document only describes a few simple ideas from a four-month undergraduate internship. Yet it took over a year to produce, and then two additional years to get the application published. God knows when the patent will be granted... it takes on average 31.7 months for the USPTO to process a software patent application (see Table 4, p. 149 of the USPTO's 2014 report), which isn't surprising given the load that they are under and the complexity of what they are supposed to do with applications. This wouldn't matter so much if this system didn't have dire consequences for innovators. It should be fixed. Really.

Update: the patent was finally granted on September 1st, 2015.

I am happy that this patent application was published, because at least it provides some public documentation about things I did while at Google. However, I still believe that software patents should not be given any legal weight. I am advertising this document only because it describes work that I did; the point of this post is to stress that I do not endorse the US software patent system. Also, my point here is not to criticise the people at Google who wrote this document (they are probably just following the rules of the game), or to blame Google for filing such applications (they presumably do so because everyone else does).

I hope that no one will run into trouble because of the existence of this patent. Fortunately, I think this is quite unlikely. If someone does, though, I will put the blame on US law for ascribing any meaning whatsoever to this farce.